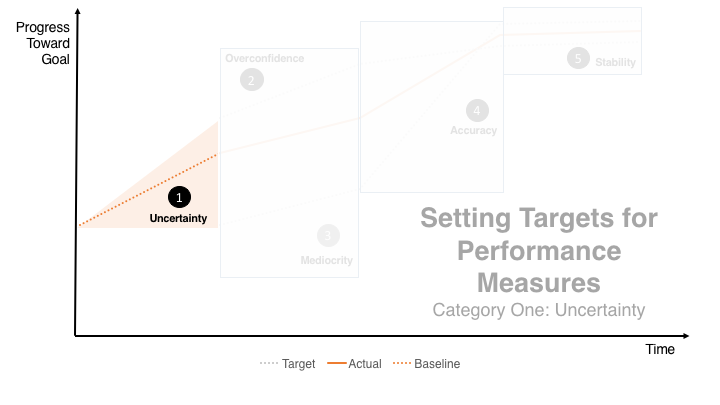

Category One – Uncertainty

Uncertainty occurs when a city has no baseline data about a performance measure, making it difficult to set appropriate targets without guessing. This most commonly occurs when a measure or program is new, or when historical data is not available due to a transition to new data systems.

Signs of uncertainty:

- No baseline data exists (yet).

- People are unwilling or uncomfortable setting targets for this measure.

- People are not confident in the way the measure is being calculated.

- People express a lack of control over the outcome.

- The measure relates to a sensitive issue for which setting targets creates downstream political, legal, or moral consequences (e.g., staff downsizing).

Figure 2

What to do with uncertainty?

- Consider a proxy measure. Infant mortality rates are a direct measure of healthcare quality but are also a proxy for the economic and social welfare of a community. The unemployment rate is a direct measure of unemployment but is also a proxy for the overall state of the economy. Proxy measures can be powerful tools for governments that do not have the exact data they want but know the outcome they are trying to achieve. Both examples given here are commonly used, defensible federal measures.

- Take advantage of benchmarking. This measure may be new for your city, but another city may already have data you could learn from.

- Make an informed guess. Department heads, division managers, and frontline staff have valuable insight and informed instincts about what is achievable. Rely on your internal expertise until better information is available.

- Remember, targets can be revisited. Targets are meant to drive progress on your priorities, and GovEx advises against changing targets during the performance period. However, revisit the target next year with new performance data and a better understanding of capabilities.

- Ask an outside expert. Reach out informally to an expert in the field: universities and think tanks have a wealth of experts willing to share their knowledge about “what good looks like” based on research and experience.

- Consider not setting a target. It is okay to wait for baseline data; just do not wait too long.

- Use this time wisely:

  - Create a data collection schedule and stick to it. People often use “baselining” as a delay tactic, so create a schedule outlining all of the data elements for collection and make sure the baseline data collection is occurring on that schedule.

  - Decide on the analytical methods in advance. Know exactly how to approach the data analysis and resolve any software issues that may hinder data analysis and data visualization once the data is available.

  - Engage stakeholders and communications channels so everyone understands the importance of a data-informed practice – there will not be as much time for those conversations once the results arrive.
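The benchmarking advice above can be made concrete with a small calculation. The following is a minimal sketch, not a GovEx method: it assumes you have gathered the same measure from a handful of peer cities and takes the median as a provisional target until your own baseline exists. The function name `provisional_target`, the city names, and all figures are hypothetical and for illustration only.

```python
from statistics import median


def provisional_target(peer_values):
    """Summarize peer-city values for one measure.

    Returns the median as a provisional target, along with the lowest
    and highest peer values to show the range of what peers achieve.
    """
    if not peer_values:
        raise ValueError("need at least one peer value")
    values = sorted(peer_values)
    return {
        "target": median(values),
        "low": values[0],
        "high": values[-1],
    }


# Hypothetical data: percent of service requests closed on time in four peer cities.
peers = {"City A": 72.0, "City B": 81.0, "City C": 64.0, "City D": 77.0}

result = provisional_target(list(peers.values()))
print(result)
```

The median is used here only because it is less sensitive to a single unusual peer than the mean; a city might reasonably pick a different summary. Whatever the choice, treat the result as a starting point to revisit once local baseline data is available.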

What not to do during uncertainty?

- Do not give up. The absence of baseline data does not justify inaction. If a data collection effort is too costly, consider an existing proxy measure. However, if the time, resources, and political capital are available to collect new data, do it. We never walked on the moon until we did, and data collection is probably not rocket science.

- Do not confuse reluctance with incapacity. Many capable program managers are reluctant to set targets for their program. They often view a target as an oversimplification of their program’s complexity or are understandably afraid to fail. But reluctance is not the same as an incapacity to set targets. If it is possible to set a target, then set a reasonable target that will comfortably stretch capable managers.

- Do not be surprised when setting a target reveals a bad underlying measure. Sometimes it is hard to distinguish good measures from bad until someone sets a target. Setting a target triggers a confrontation with the measure itself and how it is calculated. It is often the first time people see what they will be held accountable for, and they may realize that they do not control one or more of the inputs to the calculation. In situations like this, where target setting generates unrest about the underlying measure, focus on getting consensus about the measure before you attempt to set a target.